Farhadur Reza's research interests include high-performance many-core architectures, energy-efficient networks-on-chip, resource management and optimization, and machine learning techniques.

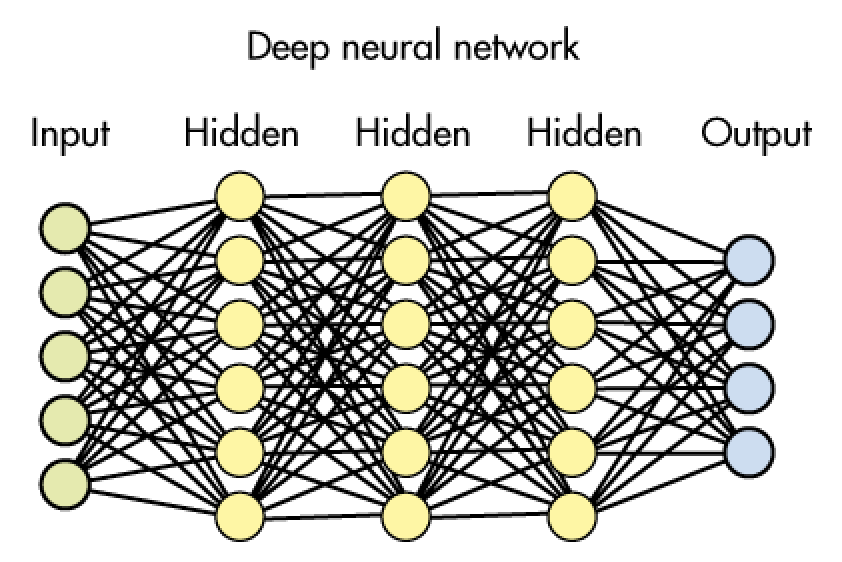

We rely on computing in the design of systems for energy, transportation, finance, education, health, defense, entertainment, and overall wellness. However, today's computing systems face major challenges at both the technology and application levels. At the technology level, traditional scaling of device sizes has slowed down and the reduction of cost per transistor is plateauing, making it increasingly difficult to extract more computer performance by employing more transistors on-chip. Power limits and reduced semiconductor reliability are making device scaling more difficult, if not impossible, to leverage for performance in the future and across all platforms, including mobile devices, embedded systems, laptops, servers, and datacenters. Simultaneously, at the application level, we are entering a new computing era that calls for a migration from an algorithm-based computing world to a learning-based, data-intensive computing paradigm in which human capabilities are scaled and magnified. To meet ever-increasing computing needs and to overcome power density limitations, the computing industry has embraced parallelism (parallel computing) as the only method for improving computer performance. Today, computing systems are designed with tens to hundreds of computing cores integrated into a single chip, and hundreds to thousands of servers based on these chips are connected in datacenters and supercomputers. However, power consumption remains a significant design problem, and such highly parallel systems still face major challenges in terms of energy efficiency, performance, and reliability.

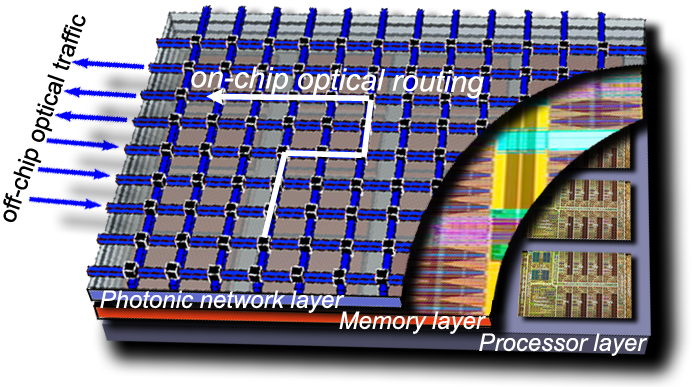

Professor Louri and his team investigate novel parallel computer architectures and technologies that deliver highly reliable, high-performance, and energy-efficient solutions to important application domains and societal needs. The research has far-reaching impacts on the computing industry and society at large. Current research topics include: (1) the use of machine learning techniques for designing energy-efficient, reliable multicore architectures, (2) scalable accelerator-rich reconfigurable heterogeneous architectures, (3) emerging interconnect technologies (photonic, wireless, RF, hybrid) for networks-on-chip (NoCs) and embedded systems, (4) future parallel computing models and architectures, including Convolutional Neural Networks (CNNs), Deep Neural Networks (DNNs), near-data computing, and approximate computing, and (5) cloud and edge computing.

- Optical Multi-Mesh Hypercube Interconnection Network

- A Free-space Optical Crossbar Switch Using Wavelength Division Multiplexing and VCSELs

- Multi-Wavelength Optical Content Addressable Parallel Processor (MW-OCAPP)

- Optical Interconnects for the Design of Large Scale Symmetric Multiprocessors

- Reliable and Fault-Tolerant Network-on-Chips for Multi-Core Architectures and Embedded Systems

- Heterogeneous Interconnect Technologies (Photonics, 3D Stacking, RF) for Scalable NoCs

- Optical Processing Techniques for High Speed Searching and Matching Applications

- Scalable Cache Coherent Scheme for Distributed Shared Memory Systems

- Optical Switching Fabrics for Scalable IP Routers

- Multi-Domain Modeling and Simulation of Optical Interconnects for Multiprocessor Application

- Design of Reconfigurable and Scalable Optical Interconnection Networks for Balanced Parallel Computing Systems

- Performance-Adaptive and Power-Aware Hybrid Opto-Electronic Interconnects for HPCs

- Nano-Photonics for Network on Chips
